Quality in software is a multifaceted concept, closely tied to both conformance to requirements and fitness for purpose. In the life sciences industry, where software permeates everything from drug discovery to pharmaceutical supply chain management, rigorous fitness-for-purpose evaluation is more critical than ever.

As pharmaceutical regulations increasingly intersect with software development standards, the traditional approach to Computer System Validation (CSV) is falling short. According to the U.S. Food & Drug Administration's draft guidance on Computer Software Assurance (CSA), the old emphasis on unit tests and standardized, heavily scripted manual and automated testing is no longer adequate on its own. The guidance instead calls for a risk-based approach that focuses assurance effort on what matters most for patient safety and product quality.

The good news? Advances in artificial intelligence (AI) and machine learning (ML) offer a promising avenue for streamlining the unscripted testing that CSA recommends. These technologies can make your testing processes more robust and efficient, helping ensure that your software meets regulatory standards and delivers on its intended purpose effectively and securely.

Computer Software Assurance Recommendations

A central principle of the CSA framework is scaling the depth of testing to risk. For instance, consider the following scenarios:

- If a requirement could endanger patients or otherwise affect human life when implemented incorrectly, that requirement should be rated as higher risk.

- If engineers and testers interpret the developed solution differently, the affected requirements carry higher risk across the entire lifecycle.

- If a test case depends on many parameters that render the solution differently on each device, such as responsive layout, portrait/landscape orientation, or placement of user interface (UI) elements based on screen resolution, a scripted version of that test case may miss edge cases (extreme or out-of-the-ordinary scenarios).
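The three scenarios above can be turned into a simple classification rule. The sketch below is a minimal illustration, assuming a hypothetical `Requirement` record whose fields mirror the scenarios and a two-level risk scale; the thresholds are arbitrary placeholders, not values taken from the CSA guidance.

```python
from dataclasses import dataclass

@dataclass
class Requirement:
    # Hypothetical fields mirroring the three scenarios above.
    req_id: str
    patient_safety_impact: bool  # could an incorrect implementation harm a patient?
    ambiguity_reports: int       # engineer/tester misinterpretations logged against it
    render_parameters: int       # device/UI parameters that change how it renders

def risk_level(req: Requirement) -> str:
    """Classify a requirement as 'high' or 'not high' risk (illustrative rules only)."""
    # Patient-safety impact alone makes a requirement high risk.
    if req.patient_safety_impact:
        return "high"
    # Repeated misinterpretation or a large parameter space also raises risk.
    # These thresholds are assumptions, not values from the CSA guidance.
    if req.ambiguity_reports >= 2 or req.render_parameters >= 4:
        return "high"
    return "not high"
```

In practice, the fields would be populated from requirement reviews and defect trackers, and the thresholds tuned to the organization's own risk procedure.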

To address such issues, CSA promotes unscripted testing (for example, exploratory testing or error guessing), in which the tester designs test cases without prescribed test steps. These test cases are highly dynamic and specific to the functionality being released. They also rely on the testers' deep knowledge of the system under development and the processes it supports. This is where AI/ML technologies, which continuously build knowledge from structured, semi-structured, and unstructured data, can help.

Using AI/ML Technology

Using AI/ML effectively requires looking across the entire software development lifecycle, and use cases for these emerging technologies exist at nearly every stage of it.

1. Crafting Requirement Scenarios for Optimized Value Delivery

Requirements gathering is typically led by those who serve as the voice of the customer: the Business Analyst, Project Manager, or Product Owner. However, these roles are not immune to bias or misinterpretation, which can cause end-user requirements to be overlooked. For example, insights from healthcare providers (HCPs) might be filtered through a medical science liaison's interpretation, leaving gaps in the captured requirements.

AI/ML technologies can help close these gaps. Deep learning models can analyze meeting notes and voice recordings to identify functional and non-functional requirements that were missed. The result is a more complete and accurate set of requirements, and software that better matches end-user needs and expectations.
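The underlying task can be illustrated with a much simpler rule-based stand-in for a deep learning model. The sketch below assumes the notes are already transcribed to text, treats modal verbs such as "must" and "should" as requirement cues, and flags cue-bearing sentences that share little vocabulary with any captured requirement. The function names, cue list, and 0.5 overlap threshold are illustrative assumptions; a real tool would use trained language models.

```python
import re

# Illustrative cue words that often signal a requirement in free text.
REQUIREMENT_CUES = re.compile(
    r"\b(must|should|needs? to|has to|have to|requires?)\b", re.IGNORECASE
)

def words(text: str) -> set[str]:
    """Lowercase words of four or more letters, used as a crude content signature."""
    return set(re.findall(r"[a-z]{4,}", text.lower()))

def candidate_requirements(notes: str, captured: list[str], max_overlap: float = 0.5) -> list[str]:
    """Return requirement-like sentences from notes that no captured requirement covers."""
    captured_sigs = [words(c) for c in captured]
    candidates = []
    for sentence in re.split(r"(?<=[.!?])\s+", notes.strip()):
        if not REQUIREMENT_CUES.search(sentence):
            continue  # no requirement cue in this sentence
        sig = words(sentence)
        # Fraction of the sentence's words found in the best-matching captured requirement.
        overlap = max((len(sig & c) / max(len(sig), 1) for c in captured_sigs), default=0.0)
        if overlap < max_overlap:
            candidates.append(sentence)
    return candidates
```

For notes reading "The dashboard must show dosing alerts. Nurses need to export reports as PDF." and a captured requirement "Dashboard must show dosing alerts", only the second sentence is returned as a possible missed requirement.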

2. Identifying Risks that Impact Requirements Across All Facets

While technical and schedule risks often come to light during the delivery phase, market and business risks emanating from other business units may go unnoticed by the project team. This oversight can significantly impact the project’s success and the ultimate value delivered to the end user.

AI/ML technologies can help close this gap. They can identify risks unique to your industry, company, or specific project by analyzing data from project retrospectives, risk registers, and issue logs. This enables a more comprehensive risk assessment, so potential challenges can be addressed before they escalate.
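As a rough illustration of mining risks from free-text records, the sketch below tags entries from retrospectives, risk registers, or issue logs with risk categories using whole-word keyword matching, then counts how often each category recurs. The category names and keyword lists are hypothetical; an AI/ML-based tool would learn these categories from labeled history rather than from a fixed list.

```python
import re
from collections import Counter

# Hypothetical keyword lists; a trained classifier would replace these.
RISK_KEYWORDS = {
    "regulatory": ["audit", "validation", "compliance", "deviation"],
    "market": ["competitor", "pricing", "demand", "launch"],
    "schedule": ["delay", "slipped", "deadline", "overrun"],
    "technical": ["outage", "defect", "integration", "performance"],
}

def tag_risks(entry: str) -> set[str]:
    """Return every risk category whose keywords appear as whole words in the entry."""
    text = entry.lower()
    return {
        category
        for category, keywords in RISK_KEYWORDS.items()
        if any(re.search(rf"\b{re.escape(k)}\b", text) for k in keywords)
    }

def risk_profile(entries: list[str]) -> Counter:
    """Count how many entries mention each risk category."""
    counts: Counter = Counter()
    for entry in entries:
        counts.update(tag_risks(entry))
    return counts
```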

3. Prioritizing Projects and Requirements Based on Comprehensive Risk Analysis

Building on the previous use case, the aggregate risk exposure from multiple sources can significantly affect project requirements. AI/ML technologies can use this cumulative risk data to prioritize both project initiatives, through opportunity-cost evaluation, and individual requirements within an approved project. This data-driven approach enables more effective resource allocation and objective prioritization of requirements while maintaining a focus on conformance quality.
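One common way to express aggregate exposure is probability multiplied by impact, summed over every source that reports a risk against the same requirement. The sketch below assumes risk records arrive as `(requirement, source, probability, impact)` tuples, an illustrative format rather than one the article prescribes, and it covers only requirement-level prioritization, not opportunity-cost evaluation of whole projects.

```python
from collections import defaultdict

def prioritize(risks: list[tuple[str, str, float, float]]) -> list[tuple[str, float]]:
    """Sum exposure (probability x impact) per requirement across all sources and
    return requirements ordered from highest to lowest total exposure."""
    exposure: dict[str, float] = defaultdict(float)
    for requirement, _source, probability, impact in risks:
        exposure[requirement] += probability * impact
    # Highest exposure first; ties broken by requirement ID for a stable order.
    return sorted(exposure.items(), key=lambda item: (-item[1], item[0]))
```

Because exposure is summed, a requirement flagged modestly by several sources (for example, a risk register and a market analysis) can outrank one flagged once with a higher individual score.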

4. Enhancing Exploratory Unit Test Case Development with AI/ML Technologies

Traditionally, exploratory testing has been reserved for testers, who rely on prior knowledge to probe the system for potential failures. Because this happens late in the lifecycle, defects found this way are more expensive to identify and fix. AI/ML technologies offer a more proactive approach: they can suggest exploratory unit test cases during the development stage, targeting specific areas such as variable initialization, object handling, code coverage, and memory leaks.
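Probing code for failures during development can be sketched with seeded random and boundary-value inputs, a technique usually called fuzzing or property-based testing; here it stands in for AI-suggested exploratory unit tests. The function under test, `dose_mg`, and its invariants (a returned dose is positive and never exceeds the cap) are hypothetical, and memory-leak or coverage analysis is outside the scope of this sketch.

```python
import random

def dose_mg(weight_kg: float, mg_per_kg: float, cap_mg: float = 1000.0) -> float:
    """Hypothetical function under test: weight-based dose, capped at cap_mg."""
    if weight_kg <= 0 or mg_per_kg <= 0:
        raise ValueError("weight and dose rate must be positive")
    return min(weight_kg * mg_per_kg, cap_mg)

def explore(fn, trials: int = 1000, cap_mg: float = 1000.0, seed: int = 7) -> list[tuple]:
    """Call fn with a mix of boundary and random inputs; return inputs that break an invariant."""
    rng = random.Random(seed)  # seeded so any failure can be reproduced
    boundary = [-1.0, 0.0, 1e-9, 1.0, 500.0, 1e6]
    failures = []
    for _ in range(trials):
        # About 30% of the time pick a boundary value, otherwise a random one.
        w = rng.choice(boundary) if rng.random() < 0.3 else rng.uniform(-10, 300)
        r = rng.choice(boundary) if rng.random() < 0.3 else rng.uniform(-1, 50)
        try:
            result = fn(w, r)
        except ValueError:
            continue  # rejecting invalid input is acceptable behavior
        # Invariants: a returned dose is positive and never exceeds the cap.
        if not (0 < result <= cap_mg):
            failures.append((w, r, result))
    return failures
```

Running `explore(dose_mg)` should return an empty list, while an uncapped, unvalidated variant such as `lambda w, r: w * r` produces failing inputs.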

5. Generating and Prioritizing Test Cases Based on Individual Risks

AI/ML technologies can significantly raise confidence in software quality by generating many test cases tailored to each requirement's complexity and associated delivery risk. These generated test cases complement manually identified ones, supporting risk-based testing approaches that cover edge cases that might otherwise be overlooked.
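Tailoring test cases to complexity and risk implies deciding how many cases each requirement deserves. A minimal sketch, assuming each requirement has a single weight (for example, a complexity score multiplied by a risk score) and a fixed overall test budget, splits the budget proportionally using the largest-remainder method so that the counts sum exactly to the budget.

```python
import math

def allocate_tests(weights: dict[str, float], budget: int) -> dict[str, int]:
    """Split a test-case budget across requirements in proportion to their weights,
    using the largest-remainder method so allocations sum exactly to the budget."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    quotas = {req: budget * w / total for req, w in weights.items()}
    alloc = {req: math.floor(q) for req, q in quotas.items()}
    leftover = budget - sum(alloc.values())
    # Give the remaining test cases to the largest fractional remainders first.
    by_remainder = sorted(quotas, key=lambda req: quotas[req] - alloc[req], reverse=True)
    for req in by_remainder[:leftover]:
        alloc[req] += 1
    return alloc
```

For weights of 15, 4, and 1 and a budget of 25 test cases, the allocation is 19, 5, and 1.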

6. Creating Test Cases for Edge Cases Based on Defect Density

Missed and misinterpreted requirements can inflate business costs. AI/ML technologies can mitigate this by analyzing unit test coverage and defect density (here, the number of defects logged against a requirement). This analysis helps identify additional test cases that should be run across multiple parameters and environments.
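The analysis described above can be sketched in three steps: count defects per requirement, flag requirements whose defect count is high or whose unit test coverage is low, and expand each flagged requirement across every combination of parameters and environments. The thresholds, the coverage format (a ratio from 0.0 to 1.0), and the parameter names are assumptions for illustration.

```python
from collections import Counter
from itertools import product

def edge_case_matrix(
    defects: list[str],
    coverage: dict[str, float],
    parameters: dict[str, list[str]],
    min_defects: int = 2,
    min_coverage: float = 0.8,
) -> dict[str, list[tuple[str, ...]]]:
    """Map each high-risk requirement to every combination of parameter values."""
    # Defect density as defined above: defects logged per requirement ID.
    density = Counter(defects)
    flagged = sorted(
        req for req in set(density) | set(coverage)
        if density[req] >= min_defects or coverage.get(req, 1.0) < min_coverage
    )
    # Full cross product of parameter values (e.g., orientation x environment x resolution).
    combos = list(product(*parameters.values()))
    return {req: list(combos) for req in flagged}
```

A full cross product grows multiplicatively with each parameter, so in practice a combinatorial reduction such as pairwise testing may be preferable once the matrix becomes large.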

7. Generating Test Data for Prioritized Scenarios

One challenge testers face is creating test data for diverse scenarios. Because the quality of test data directly influences test outcomes, AI/ML technologies can help by generating large datasets. This approach, akin to Monte Carlo simulation, not only reduces the burden on manual testing but also helps identify test cases that are prime candidates for automation.
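A minimal Monte Carlo style generator samples each field from an assumed distribution and then appends explicit boundary records so that extreme values are always exercised. The field names, distributions, and 10% prevalence below are illustrative assumptions, and the records are synthetic, so no real patient data is involved. Fixing the random seed keeps the dataset reproducible, which helps when a generated record exposes a defect.

```python
import random

def synthetic_patients(n: int, seed: int = 42) -> list[dict]:
    """Generate n synthetic patient records by random sampling, plus boundary records.
    Field names and distributions are illustrative assumptions."""
    rng = random.Random(seed)
    records = []
    for i in range(n):
        age = min(max(int(rng.gauss(50, 18)), 0), 105)            # clamp to 0..105
        weight = round(min(max(rng.gauss(75, 15), 2.5), 250), 1)  # clamp to 2.5..250 kg
        records.append({
            "patient_id": f"P{i:05d}",
            "age": age,
            "weight_kg": weight,
            "renal_impairment": rng.random() < 0.1,  # assumed ~10% prevalence
        })
    # Boundary records guarantee the extremes appear in every dataset.
    records.append({"patient_id": "P-MIN", "age": 0, "weight_kg": 2.5, "renal_impairment": False})
    records.append({"patient_id": "P-MAX", "age": 105, "weight_kg": 250.0, "renal_impairment": True})
    return records
```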

8. Recommending and Implementing Automation Scenarios

In the context of regression testing, AI/ML technologies can monitor the execution status of test cases across various releases. This monitoring provides actionable insights, such as recommending test cases for automation; breaking down test cases into smaller, reusable modules; and even generating automation scripts for review by automation engineers.
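A simple heuristic version of this recommendation looks at execution history: tests that recur across many releases and produce stable results are natural automation candidates. The sketch below assumes a hypothetical `Execution` record per test run; the minimum release count and pass-rate threshold are arbitrary placeholders, and excluding frequently failing tests (which may point to an unstable feature) is a design assumption rather than a rule from the article.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Execution:
    # Hypothetical record of one test run in one release.
    test_id: str
    release: str
    passed: bool

def automation_candidates(
    history: list[Execution], min_releases: int = 3, min_pass_rate: float = 0.9
) -> list[str]:
    """Recommend tests that ran in at least min_releases releases with a stable pass rate."""
    releases: dict[str, set[str]] = defaultdict(set)
    runs: dict[str, int] = defaultdict(int)
    passes: dict[str, int] = defaultdict(int)
    for run in history:
        releases[run.test_id].add(run.release)
        runs[run.test_id] += 1
        passes[run.test_id] += int(run.passed)
    candidates = [
        test_id for test_id in releases
        if len(releases[test_id]) >= min_releases
        and passes[test_id] / runs[test_id] >= min_pass_rate
    ]
    # Most frequently recurring tests first, then by ID.
    return sorted(candidates, key=lambda t: (-len(releases[t]), t))
```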

9. Recommending and Implementing AI/ML-Enabled Monitoring Solutions for System Health

A key challenge in product development is continuously monitoring system health in a production environment. This task is complex: it requires detailed knowledge of the production system, as well as specific access privileges for developers to implement monitoring solutions and for testers to validate them. AI/ML technologies offer a streamlined approach by creating health alert monitors that analyze event triggers, database usage, and network alerts. These monitors can proactively recommend when computerized systems, particularly those used in life sciences, require recalibration or other adjustments.
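As a simple statistical stand-in for an ML-based health monitor, the sketch below flags a metric sample (for example, database connections in use) when it deviates from the mean of the preceding window by more than a set number of standard deviations, a rolling z-score. The metric, window size, and threshold are assumptions; a production monitor would also need to account for trends and seasonality.

```python
from collections import deque
from statistics import mean, stdev

def health_alerts(samples: list[float], window: int = 10, threshold: float = 3.0) -> list[int]:
    """Return indices of samples more than `threshold` standard deviations away
    from the mean of the preceding `window` samples (window must be at least 2)."""
    recent = deque(maxlen=window)  # sliding window of the most recent samples
    alerts = []
    for i, value in enumerate(samples):
        if len(recent) == window:
            mu, sigma = mean(recent), stdev(recent)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                alerts.append(i)
        recent.append(value)
    return alerts
```

With a steady metric around 50 and a single spike to 95, only the spike's index is returned.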

10. Streamlining Documentation with AI/ML Technologies

Documentation is a critical but often cumbersome aspect of software development and maintenance. AI/ML technologies can review both user and system documentation, employing language models to identify and fill gaps. These technologies can rewrite documentation for easier comprehension and even generate media-rich documentation, such as converting text into video formats.

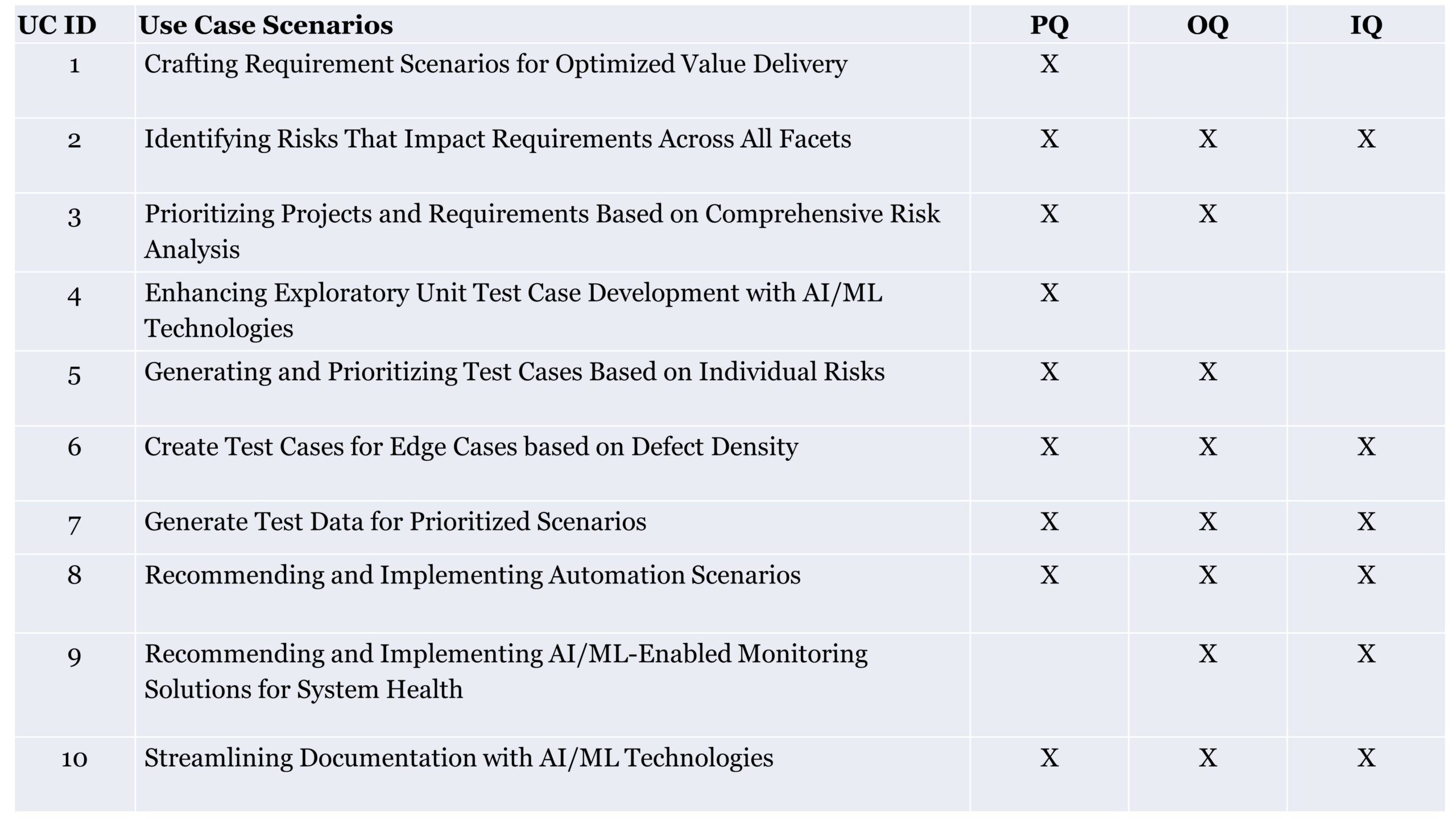

Mapping Scenarios to Life Sciences Assurance Criteria

Each of these scenarios maps to one or more of the qualification stages commonly used in the life sciences industry: Performance Qualification (PQ), Operational Qualification (OQ), and Installation Qualification (IQ). These qualifications serve as benchmarks to ensure not just conformance to scope but also fitness for purpose.

Below is a tabulation of how these scenarios map to PQ, OQ, and IQ:

Conclusion

As these technologies evolve at an accelerating pace, early adoption and strategic use become imperative. Implementing each use case requires a coordinated approach. When the use cases are progressively elaborated, individually or in combination, they can significantly increase the confidence and accuracy that life sciences organizations demand of the software they develop or deploy.